Testing Google’s Language Detection

As Google adds ten more languages to its machine translation service, it seems to be on its way to becoming the most convenient [[universal translator]] of the world’s popular languages. Google’s handling of languages is of course not perfect, however. In particular, I’ve been complaining to friends for a while about the weaknesses of Google’s handling of queries in Chinese character ([[Chinese characters | 漢字/汉字]]) scripts. In this post, I run some tests using Google’s Language Detection service to try to better understand its handling of Chinese character queries.

Background

Chinese characters have been used all across East Asia, most notably in Chinese, Japanese, Korean, and Vietnamese (the “CJKV”). Prescriptivist writing reforms in Communist China and Japan have simplified many characters, though. Some characters were simplified in the same way, some in different ways, and some in only one country but not the other. For more information, there’s [[Chinese character | Wikipedia]] or Ken Lunde’s CJKV Information Processing.

The problem

The issue comes up when you search for a word in Chinese characters that clearly comes from one particular Chinese character-using language. In my experience, Google doesn’t infer the user’s language from the query, and it returns many results in other Chinese character-using languages as well. [1]

Take, for example, a query like “七面鳥”, meaning ‘turkey’ in Japanese. While all characters are very common in traditional Chinese (鳥 is simplified to 鸟 in China), the combination “七面鳥” is quite rare in Chinese. However, when you search for “七面鳥,” many of the first results are in Chinese and only two of the first ten results are in Japanese.

Does Google’s corpus not identify “七面鳥” as a primarily Japanese word? It does: searching for “七面鳥” and limiting results to a given language yields the hit counts below. A similar effect can be seen with Japanese words such as “芝生” (‘grass’) or “泥棒” (‘burglar’). The “Japanese on first page” column gives the number of first-page results that are in Japanese when searching from the US with no language specified.

| Query | Chinese (simplified) | Chinese (traditional) | Japanese | Japanese on first page |
|---|---|---|---|---|
| 七面鳥 | 786 | 926 | 395,000 | 2/10 |
| 芝生 | 55,600 | 216,000 | 2,230,000 | 0/10 |
| 泥棒 | 13,500 | 22,500 | 10,400,000 | 3/10 |

In a perfect world, I would like Google to identify the language that the query is in and then weight results in that language more heavily in the results list. So the issue comes down to one of language detection.
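To make the imbalance in the table concrete, here is a minimal sketch that turns the hit counts above into per-language shares. The language codes and the function name `language_shares` are labels chosen for this illustration, and treating hit counts as a stand-in for "which language does this query belong to" is an assumption of the sketch, not something Google is known to do.

```python
# Hit counts copied from the table above; the language codes are just labels.
HITS = {
    "七面鳥": {"zh-CN": 786, "zh-TW": 926, "ja": 395_000},
    "芝生": {"zh-CN": 55_600, "zh-TW": 216_000, "ja": 2_230_000},
    "泥棒": {"zh-CN": 13_500, "zh-TW": 22_500, "ja": 10_400_000},
}


def language_shares(hits):
    """Return each language's fraction of the total hit count for one query."""
    total = sum(hits.values())
    return {lang: count / total for lang, count in hits.items()}


if __name__ == "__main__":
    for query, hits in HITS.items():
        shares = language_shares(hits)
        best = max(shares, key=shares.get)
        print(query, best, f"{shares[best]:.1%}")
```

By this rough measure all three queries are overwhelmingly Japanese, which is what makes the small number of Japanese pages on the first results page stand out.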

There are broadly two different approaches to language detection and, indeed, all natural language processing problems: parsing and counting. In this case, parsing involves trying to break apart the query into words and then computing how likely such a string of words is in each given language. Counting simply takes an inventory of the characters given and compares them to their frequencies in each language, computing how likely such a string of characters is in each language. Parsing is the “smarter” approach, but more difficult and computationally intensive.
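As a rough illustration of the counting approach, the sketch below scores a query against per-language character probabilities and picks the highest-scoring language. The probability table `CHAR_FREQ`, the smoothing value `FLOOR`, and the function names are all hypothetical, invented for this example; a real detector would estimate frequencies from a large corpus, and nothing here reflects Google's actual implementation.

```python
import math
from collections import Counter

# Hypothetical character probabilities per language (illustrative values only).
CHAR_FREQ = {
    "ja": {"気": 0.004, "体": 0.003, "簡": 0.001, "木": 0.002},
    "zh-TW": {"氣": 0.004, "體": 0.003, "簡": 0.001, "木": 0.002},
    "zh-CN": {"气": 0.004, "体": 0.003, "简": 0.001, "木": 0.002},
}
FLOOR = 1e-6  # assumed probability for a character unseen in a language


def log_likelihood(text, freq):
    """Sum of log-probabilities of the characters, ignoring whitespace."""
    counts = Counter(ch for ch in text if not ch.isspace())
    return sum(n * math.log(freq.get(ch, FLOOR)) for ch, n in counts.items())


def detect_by_counting(text):
    """Return the language whose character frequencies best explain the text."""
    scores = {lang: log_likelihood(text, freq) for lang, freq in CHAR_FREQ.items()}
    return max(scores, key=scores.get)


if __name__ == "__main__":
    for query in ["天気", "天气", "天氣"]:
        print(query, detect_by_counting(query))
```

Because the score depends only on which characters appear and how often, a detector of this kind cannot tell a real word from a shuffled one. A parsing approach would need a word list or language model on top of this.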

Google was kind enough to give us a language detection AJAX service so we can get a sense of how their language detection works. This service also gives a “confidence” value on the detection result. For the rest of this entry, we’ll test some hypotheses against this service and conclude at the end.

Do spaces matter?

No. While spaces are sometimes used in Japanese and Chinese writing to represent word boundaries, especially around numbers and roman letters, they are also seen on the web to encourage line breaks. It would make sense for Google’s language detection service to ignore spaces in Chinese character queries, and that does seem to be the case. All tests I ran with Chinese character queries gave the same result with the same confidence, with and without spaces in random places.
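A test like this can be set up with a small helper that scatters spaces through a query, as sketched below. The function names and the fixed seed are choices made for this illustration. The detection call itself is left out, since the sketch only prepares the query variants to compare.

```python
import random


def with_random_spaces(text, n_spaces, seed=0):
    """Return `text` with `n_spaces` spaces inserted at random positions."""
    rng = random.Random(seed)  # seeded so the variants are reproducible
    chars = list(text)
    for _ in range(n_spaces):
        # randint is inclusive, so a space may also land at the very end
        chars.insert(rng.randint(0, len(chars)), " ")
    return "".join(chars)


def strip_spaces(text):
    """Remove all whitespace, which is what a space-insensitive detector effectively sees."""
    return "".join(text.split())


if __name__ == "__main__":
    query = "骨粗鬆症"
    for seed in range(3):
        variant = with_random_spaces(query, 2, seed=seed)
        print(repr(variant), strip_spaces(variant) == query)
```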

Does order matter?

No. This was slightly disappointing to see. I took the Japanese string “骨粗鬆症” (‘osteoporosis’, if you’re curious) and ran every permutation against the language detector and got the same results, including the same confidence values. This is a clear indicator that Google uses only counting, not parsing, in their detector.
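The permutation test can be sketched as follows. The `detect` argument stands for any function that maps a query to a result; `bag_of_chars` is a hypothetical stand-in that sees only character counts, included to show why a counting-only detector must give identical answers for every ordering. None of these names come from Google's service.

```python
from collections import Counter
from itertools import permutations


def permuted_queries(text):
    """All distinct reorderings of the characters in `text`."""
    return sorted({"".join(p) for p in permutations(text)})


def order_invariant(detect, text):
    """True if `detect` returns the same value for every reordering of `text`."""
    return len({detect(q) for q in permuted_queries(text)}) == 1


def bag_of_chars(text):
    """Stand-in detector that only looks at how often each character occurs."""
    return tuple(sorted(Counter(text).items()))


if __name__ == "__main__":
    query = "骨粗鬆症"
    print(len(permuted_queries(query)))          # number of orderings tested
    print(order_invariant(bag_of_chars, query))  # counting ignores order
    print(order_invariant(lambda q: q[0], query))  # an order-sensitive function
```

A four-character string with no repeated characters has 24 orderings, so the full test is cheap to run. The same check grows factorially with query length.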

Does repetition matter?

Yes. Now that it seems that Google does not use any parsing and only uses character frequencies in identifying the source language, let’s see how repetition can affect the detection service.

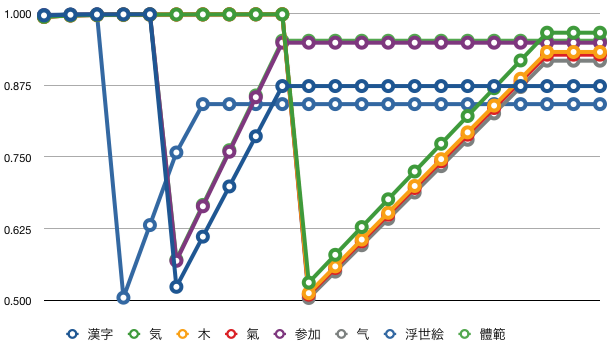

First, I took some Chinese character strings and ran them through the detection service with different numbers of repetitions, e.g. “参加”, “参加参加”, “参加参加参加”, “参加参加参加参加”… The queries I used were the following:

| Token | Chinese (traditional) | Japanese | Chinese (simplified) |
|---|---|---|---|
| 木 | X | X | X |
| 漢字 | X | X | |
| 氣 | X | | |
| 參加 | X | | |
| 参加 | | X | X |
| 気 | | X | |
| 气 | | | X |

For each token type, the detection service made up its mind quite quickly. Its confidence, however, was more interesting.

Each of the confidence values dips sharply after three, five, or ten repetitions. Note, however, the length of the tokens which dipped at each of those points. I interpret this to mean that a different algorithm is used for strings of fewer than ten characters than for strings of ten or more. However, the detection service did not change its answer after this point on any of the tokens.
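The repeated queries are easy to generate, and a little arithmetic shows at which repetition count a token first reaches ten characters. The sketch below uses hypothetical helper names, and the ten-character threshold is taken from the interpretation above, not from any documented behavior.

```python
import math


def repeated_queries(token, max_reps):
    """Return the token repeated 1, 2, ..., max_reps times."""
    return [token * n for n in range(1, max_reps + 1)]


def reps_to_reach(token, length=10):
    """Smallest repetition count at which the query has at least `length` characters."""
    return math.ceil(length / len(token))


if __name__ == "__main__":
    for token in ["木", "漢字", "参加", "気"]:
        print(token, reps_to_reach(token), repeated_queries(token, 3))
```

Under this reading, one-character tokens cross the threshold at ten repetitions and two-character tokens at five.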

Second, I took two characters, “簡” and “体,” and crossed different numbers of them together to see how that would affect the language detected. Note that “簡” is used in traditional Chinese and Japanese, while “体” is used in simplified Chinese and Japanese.

| | 簡x0 | 簡x1 | 簡x2 | 簡x3 | 簡x4 | 簡x5 | 簡x6 | 簡x7 | 簡x8 | 簡x9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 体x0 | | 0.995 | 0.998 | 0.998 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 |
| 体x1 | 0.995 | 0.998 | 0.998 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 |
| 体x2 | 0.998 | 0.998 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.531 |
| 体x3 | 0.998 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.52 | 0.568 |
| 体x4 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.516 | 0.565 | 0.613 |
| 体x5 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.512 | 0.561 | 0.609 | 0.657 |
| 体x6 | 0.999 | 0.999 | 0.999 | 0.999 | 0.999 | 0.507 | 0.556 | 0.605 | 0.653 | 0.702 |
| 体x7 | 0.999 | 0.999 | 0.999 | 0.999 | 0.502 | 0.551 | 0.6 | 0.649 | 0.697 | 0.746 |
| 体x8 | 0.999 | 0.999 | 0.999 | 1 | 0.545 | 0.595 | 0.644 | 0.693 | 0.741 | 0.79 |
| 体x9 | 0.999 | 0.999 | 1 | 0.539 | 0.589 | 0.638 | 0.687 | 0.736 | 0.785 | 0.834 |

Legend for the detected language (shown as cell shading in the original table): Japanese, Chinese (traditional), Chinese (simplified).
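The grid of crossed queries behind the table above can be generated as in the sketch below. The function name is hypothetical, and placing all copies of the first character before all copies of the second is an assumption made here; given the earlier finding that order does not matter, the arrangement should not affect the outcome.

```python
def crossed_queries(a, b, max_count=9):
    """Map (count_a, count_b) to a query of count_a copies of `a` then count_b copies of `b`.

    The empty (0, 0) combination is skipped because there is nothing to detect.
    """
    return {
        (i, j): a * i + b * j
        for i in range(max_count + 1)
        for j in range(max_count + 1)
        if i + j > 0
    }


if __name__ == "__main__":
    grid = crossed_queries("簡", "体")
    print(len(grid))     # number of non-empty combinations
    print(grid[(2, 1)])  # two copies of 簡 followed by one 体
```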

Conclusion

For Chinese character-based languages, Google’s language detection algorithm uses simple counting rather than parsing, identifying languages by looking at the frequency of characters rather than the frequency of words. As such, the algorithm simply acts as a script detector, not a language detector. Moreover, as a simple counting method is used, duplicating characters used in one language but not another can very easily skew the resulting output.

As a trivial aside, it seems that Google’s algorithm is slightly different for strings shorter than ten characters, as can be seen in the dip and subsequent rise of confidence values once a string reaches ten characters.

-

[1] Just to complicate matters further, there’s also the issue of where you’re accessing Google from. For example, accessing from the US (or via a friend’s VPN), a query for the Japanese-simplified “天気” seems to return only Japanese pages. However, accessing from Taiwan, Google assumes you may have meant the full-form “天氣”, giving you pages with both “天気” and “天氣”. As a result, Yahoo Japan weather is the first result from the US and third from Taiwan, while Yahoo Taiwan weather is first in Taiwan and doesn’t even show up from the US. This default character substitution in Taiwan is one of my least-favorite Google “features.”

Similar effects can most likely be seen between the US and China. In the rest of this post, all queries will be made from the US.